Lucas Tabelini

I am currently a M.Sc. student in Computer Science at UFES. I conduct my research in LCAD, the

laboratory where the IARA

self-driving

car is developed. My advisor is Prof. Thiago Oliveira-Santos.

Prior to my M.Sc., I obtained my B.Sc. degree in Computer Engineering at the same university.

My current research areas of interest include autonomous driving, computer vision, and deep learning.

Publications

|

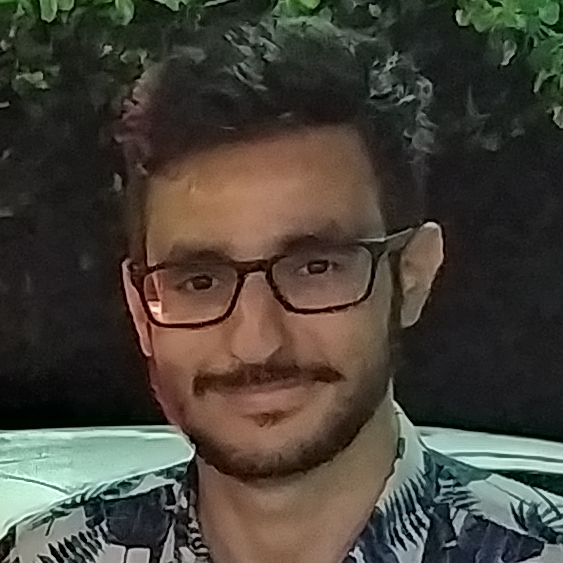

Keep your Eyes on the Lane: Real-time Attention-guided Lane DetectionConference on Computer Vision and Pattern Recognition (CVPR), 2021A novel state-of-the-art lane detection model for autonomous vehicles that is more accurate and faster than existing ones. Show abstractModern lane detection methods have achieved remarkable performances in complex real-world scenarios, but many have issues maintaining real-time efficiency, which is important for autonomous vehicles. In this work, we propose LaneATT: an anchor-based deep lane detection model, which, akin to other generic deep object detectors, uses the anchors for the feature pooling step. Since lanes follow a regular pattern and are highly correlated, we hypothesize that in some cases global information may be crucial to infer their positions, especially in conditions such as occlusion, missing lane markers, and others. Thus, this work proposes a novel anchor-based attention mechanism that aggregates global information. The model was evaluated extensively on three of the most widely used datasets in the literature. The results show that our method outperforms the current state-of-the-art methods showing both higher efficacy and efficiency. Moreover, an ablation study is performed along with a discussion on efficiency trade-off options that are useful in practice. Lucas Tabelini, R. Berriel, T. M. Paixão, C. Badue, A. F. De Souza, T. Oliveira-SantosarXiv CVF Github |

|

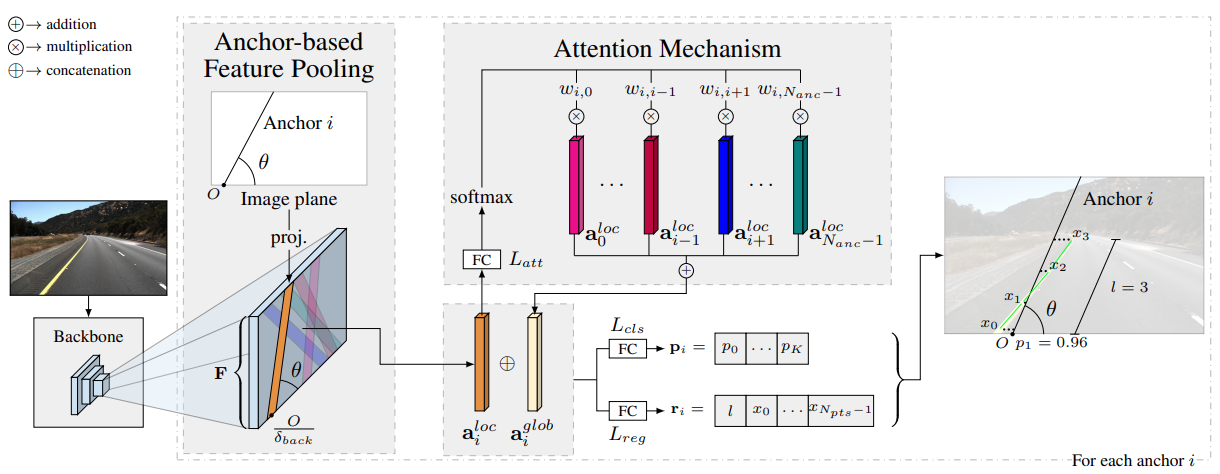

Deep traffic light detection by overlaying synthetic context on arbitrary natural imagesComputers & Graphics, 2021A method to synthetically generate training data for deep traffic light detectors using non-realistic computer graphics. Show abstractDeep neural networks come as an effective solution to many problems associated with autonomous driving. By providing real image samples with traffic context to the network, the model learns to detect and classify elements of interest, such as pedestrians, traffic signs, and traffic lights. However, acquiring and annotating real data can be extremely costly in terms of time and effort. In this context, we propose a method to generate artificial traffic-related training data for deep traffic light detectors. This data is generated using basic non-realistic computer graphics to blend fake traffic scenes on top of arbitrary image backgrounds that are not related to the traffic domain. Thus, a large amount of training data can be generated without annotation efforts. Furthermore, it also tackles the intrinsic data imbalance problem in traffic light datasets, caused mainly by the low amount of samples of the yellow state. Experiments show that it is possible to achieve results comparable to those obtained with real training data from the problem domain, yielding an average mAP and an average F1-score which are each nearly 4 p.p. higher than the respective metrics obtained with a real-world reference model. J. P. V. de Mello, Lucas Tabelini, R. Berriel, T. M. Paixão, A. F. De Souza, C. Badue, N. Sebe, T. Oliveira-SantosarXiv ScienceDirect |

|

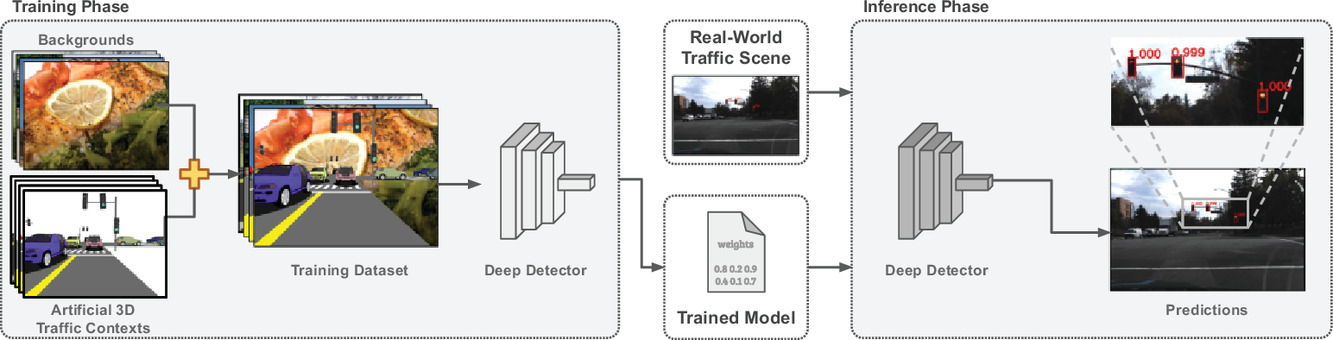

PolyLaneNet: Lane Estimation via Deep Polynomial RegressionInternational Conference on Pattern Recognition (ICPR), 2020A simple and fast lane detection method on deep polynomial degression. Show abstractOne of the main factors that contributed to the large advances in autonomous driving is the advent of deep learning. For safer self-driving vehicles, one of the problems that has yet to be solved completely is lane detection. Since methods for this task have to work in real-time (+30 FPS), they not only have to be effective (i.e., have high accuracy) but they also have to be efficient (i.e., fast). In this work, we present a novel method for lane detection that uses as input an image from a forward-looking camera mounted in the vehicle and outputs polynomials representing each lane marking in the image, via deep polynomial regression. The proposed method is shown to be competitive with existing state-of-the-art methods in the TuSimple dataset while maintaining its efficiency (115 FPS). Additionally, extensive qualitative results on two additional public datasets are presented, alongside with limitations in the evaluation metrics used by recent works for lane detection. Finally, we provide source code and trained models that allow others to replicate all the results shown in this paper, which is surprisingly rare in state-of-the-art lane detection methods. Lucas Tabelini, R. Berriel, T. M. Paixão, C. Badue, A. F. De Souza, T. Oliveira-SantosarXiv IEEE Xplore Github |

|

Deep Traffic Sign Detection and Recognition Without Target Domain Real ImagesarXiv preprint, 2020An effortless method for generating training data for deep traffic sign detectors (extension for the IJCNN'19 paper). Show abstractDeep learning has been successfully applied to several problems related to autonomous driving, often relying on large databases of real target-domain images for proper training. The acquisition of such real-world data is not always possible in the self-driving context, and sometimes their annotation is not feasible. Moreover, in many tasks, there is an intrinsic data imbalance that most learning-based methods struggle to cope with. Particularly, traffic sign detection is a challenging problem in which these three issues are seen altogether. To address these challenges, we propose a novel database generation method that requires only (i) arbitrary natural images, i.e., requires no real image from the target-domain, and (ii) templates of the traffic signs. The method does not aim at overcoming the training with real data, but to be a compatible alternative when the real data is not available. The effortlessly generated database is shown to be effective for the training of a deep detector on traffic signs from multiple countries. On large data sets, training with a fully synthetic data set almost matches the performance of training with a real one. When compared to training with a smaller data set of real images, training with synthetic images increased the accuracy by 12.25%. The proposed method also improves the performance of the detector when target-domain data are available. Lucas Tabelini, R. Berriel, T. M. Paixão, A. F. De Souza, C. Badue, N. Sebe, T. Oliveira-SantosarXiv |

|

Effortless Deep Training for Traffic Sign Detection Using Templates and Arbitrary Natural ImagesInternational Joint Conference on Neural Networks (IJCNN), 2019An effortless method for generating training data for deep traffic sign detectors. Show abstractDeep learning has been successfully applied to several problems related to autonomous driving. Often, these solutions rely on large networks that require databases of real image samples of the problem (i.e., real world) for proper training. The acquisition of such real-world data sets is not always possible in the autonomous driving context, and sometimes their annotation is not feasible (e.g., takes too long or is too expensive). Moreover, in many tasks, there is an intrinsic data imbalance that most learning-based methods struggle to cope with. It turns out that traffic sign detection is a problem in which these three issues are seen altogether. In this work, we propose a novel database generation method that requires only (i) arbitrary natural images, i.e., requires no real image from the domain of interest, and (ii) templates of the traffic signs, i.e., templates synthetically created to illustrate the appearance of the category of a traffic sign. The effortlessly generated training database is shown to be effective for the training of a deep detector (such as Faster R-CNN) on German traffic signs, achieving 95.66% of mAP on average. In addition, the proposed method is able to detect traffic signs with an average precision, recall and F1-score of about 94%, 91% and 93%, respectively. The experiments surprisingly show that detectors can be trained with simple data generation methods and without problem domain data for the background, which is in the opposite direction of the common sense for deep learning. Lucas Tabelini, T. M. Paixão, R. Berriel, A. F. De Souza, C. Badue, N. Sebe, T. Oliveira-SantosarXiv IEEE Xplore Github |

|

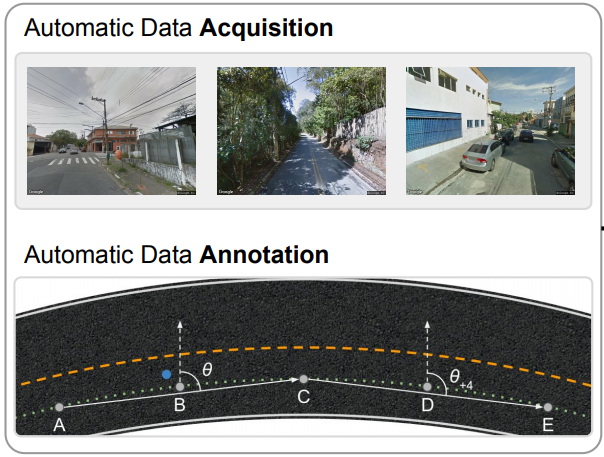

Heading Direction Estimation Using Deep Learning with Automatic Large-scale Data AcquisitionInternational Joint Conference on Neural Networks (IJCNN), 2018A model to estimate heading direction for autonomous vehicles or ADAS trained using automatically acquired data. Show abstractAdvanced Driver Assistance Systems (ADAS) have experienced major advances in the past few years. The main objective of ADAS includes keeping the vehicle in the correct road direction, and avoiding collision with other vehicles or obstacles around. In this paper, we address the problem of estimating the heading direction that keeps the vehicle aligned with the road direction. This information can be used in precise localization, road and lane keeping, lane departure warning, and others. To enable this approach, a large-scale database 1+ million images) was automatically acquired and annotated using publicly available platforms such as the Google Street View API and OpenStreetMap. After the acquisition of the database, a CNN model was trained to predict how much the heading direction of a car should change in order to align it to the road 4 meters ahead. To assess the performance of the model, experiments were performed using images from two different sources: a hidden test set from Google Street View (GSV) images and two datasets from our autonomous car (IARA). The model achieved a low mean average error of 2.359° and 2.524° for the GSV and IARA datasets, respectively; performing consistently across the different datasets. It is worth noting that the images from the IARA dataset are very different (camera, FOV, brightness, etc.) from the ones of the GSV dataset, which shows the robustness of the model. In conclusion, the model was trained effortlessly (using automatic processes) and showed promising results in real-world databases working in real-time (more than 75 frames per second). R. Berriel, Lucas Tabelini, V. B. Cardoso, R. Guidolini, C. Badue, A. F. De Souza, T. Oliveira-SantosIEEE Xplore |

Academic Services

Reviewer for:- Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)

- Transactions on Image Processing (T-IP)

- Transactions on Intelligent Transportation Systems (T-ITS)

- International Conference on Robotics and Automation (ICRA)